The IT industry has a word for a hard drive crash: disaster. Backups are the primary tool for disaster recovery.

I try to address backups at every site I visit because, in my experience, backups in the vast majority of cases are not configured (or not behaving) the way their owners expect. Sometimes the cause is a hardware failure; sometimes it is a misunderstanding about how the backups were configured in the first place. Even when backups are working, they may be set up in a way that makes it far from obvious how to restore files from them if a disaster ever does occur.

I’ve seen situations where an external drive was attached to handle backups and worked for a while, but after a few years the drive stopped functioning and the backup software gave no warning of the failure. The user carries on, blissfully unaware that their backups no longer back anything up.

Others install an external drive but mistakenly configure the backups to save to the internal drive, which defeats the purpose entirely: if that drive fails, the original files and their backups are lost together.

Simple, intuitive backup software is widely available, and in many cases it is free, even at the enterprise level. Hard drives are also cheaper and higher in capacity than ever before.

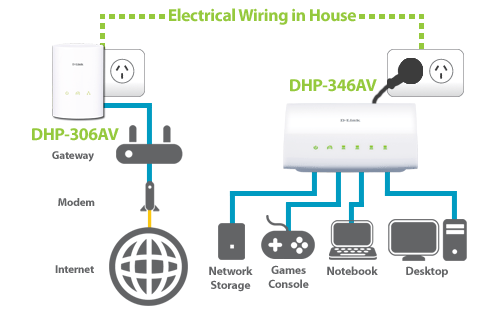

For homes and businesses alike, offsite storage addresses risks that local backups cannot, such as fires, floods or earthquakes. For the price of a couple of cups of coffee (or one expensive one) each month, you can have the peace of mind that comes with data redundancy.

Take a good look at your backup designs and verify that they do what you think they do. Don’t find yourself on the wrong side of a disaster.
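As one way to start that verification, here is a minimal Python sketch that checks for the two failure modes described above: a backup drive that has silently stopped receiving files, and a backup destination that sits on the same drive as the data it protects. The function names, the example paths and the 26-hour freshness threshold are hypothetical choices for illustration, not features of any particular backup product. It compares filesystem device IDs, so two partitions on the same physical disk will not be flagged.

```python
import os
import time
from pathlib import Path
from typing import List, Optional


def newest_mtime(folder: Path) -> Optional[float]:
    """Return the latest modification time of any file under folder, or None if it holds no files."""
    newest = None
    for path in folder.rglob("*"):
        if path.is_file():
            mtime = path.stat().st_mtime
            if newest is None or mtime > newest:
                newest = mtime
    return newest


def check_backup(source: Path, destination: Path, max_age_hours: float = 26.0) -> List[str]:
    """Return a list of human-readable problems; an empty list means no problems were found.

    Hypothetical helper: max_age_hours assumes a daily backup job, plus a little slack.
    """
    # A dead or unplugged external drive usually shows up as a missing folder.
    if not destination.is_dir():
        return [f"backup destination {destination} is missing or not mounted"]

    problems = []

    # Equal st_dev values mean both paths live on the same filesystem,
    # i.e. the backups would be lost along with the originals.
    if source.stat().st_dev == destination.stat().st_dev:
        problems.append("backup destination is on the same filesystem as the source")

    # A backup that ran recently should have written at least one fresh file.
    newest = newest_mtime(destination)
    if newest is None:
        problems.append("backup destination contains no files")
    else:
        age_hours = (time.time() - newest) / 3600
        if age_hours > max_age_hours:
            problems.append(f"newest backup file is {age_hours:.0f} hours old")

    return problems
```

To use it, you might call `check_backup(Path.home() / "Documents", Path("/Volumes/BackupDrive"))` (substituting your own data folder and backup drive) from a scheduled task and send yourself an email whenever the returned list is not empty. The point is that the check runs independently of the backup software, so it still raises an alarm when that software fails silently.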

If you would like a managed, or at least monitored, offsite backup solution, McLean IT Consulting offers affordable storage packages for homes and businesses. We are notified of any failed backup job, so we can respond to issues promptly and keep your data safe.